When using Laravel Vapor, it's possible to specify that your application uses multiple queues when configuring your environment.

```yaml
id: 12345
name: my-project
environments:
    production:
        queue-concurrency: 100
        queues:
            - default
            - invoices
            - mail
```

When you deploy a configuration like the example above, Vapor provisions three SQS queues (if they don't already exist) and sets up an event source mapping between each queue and the consuming Lambda function so that all jobs are processed.

All of the configured queues use the same Lambda function to process jobs, which is generally fine since Lambda picks up jobs almost instantly.

However, this means all queues share the function's concurrency, which is either set explicitly with the queue-concurrency option in the vapor.yml configuration file, as in the example above, or defaults to the overall account concurrency.

Note: You can read more about Lambda concurrency in this article.

If you have a busy queue that consumes a lot of your allowed concurrency, you may find that jobs from your other queues, or even HTTP requests, start to be throttled by AWS.
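To make the starvation problem concrete, here is a deliberately simplified toy model, not how Lambda actually schedules invocations: one shared pool of concurrency slots is handed out to queues in order, so the queue listed first stands in for a busy queue that scaled up before the others. The function name `allocate` and the demand numbers are hypothetical; the optional `caps` argument previews per-queue limits like the ones introduced below.

```python
from typing import Dict, Optional


def allocate(
    demand: Dict[str, int],
    pool: int,
    caps: Optional[Dict[str, int]] = None,
) -> Dict[str, int]:
    """Toy model: grant concurrency slots from one shared pool, in dict order.

    `demand` is how many concurrent jobs each queue would like to run and
    `pool` is the function's total concurrency. This is an illustration only,
    not Lambda's real scaling behaviour.
    """
    caps = caps or {}
    granted: Dict[str, int] = {}
    remaining = pool
    for queue, wanted in demand.items():
        # A per-queue cap (if one is given) limits how much this queue may take.
        limit = min(wanted, caps.get(queue, wanted))
        granted[queue] = min(limit, remaining)
        remaining -= granted[queue]
    return granted


demand = {"default": 150, "invoices": 5, "mail": 5}
print(allocate(demand, pool=100))
# {'default': 100, 'invoices': 0, 'mail': 0}
print(allocate(demand, pool=100, caps={"default": 80, "invoices": 10, "mail": 10}))
# {'default': 80, 'invoices': 5, 'mail': 5}
```

In this model, any demand a queue doesn't get corresponds to work that would be throttled in the real system: without caps, the busy default queue leaves nothing for invoices or mail.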

Thanks to a recent update to Lambda and SQS, we're happy to announce this is no longer an issue.

In short, it is now possible to set the concurrency of individual queues.

id: 12345

name: my-project

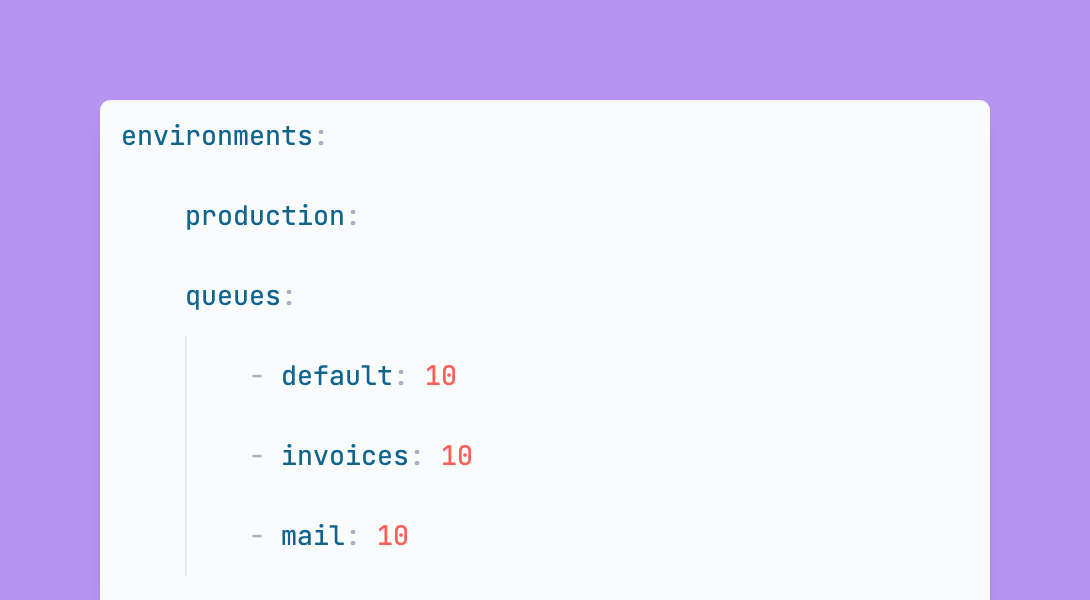

environments:

production:

queue-concurrency: 100

queues:

- default: 80

- invoices: 10

- mail: 10

In the configuration above, we set the overall queue function concurrency to 100 and divide that concurrency between the three queues in the proportions we want.
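When loaded by a YAML parser, a bare entry such as `- default` becomes a string, while `- default: 80` becomes a one-key mapping. The hypothetical helper below (not part of Vapor, whose CLI is written in PHP) shows one way to normalize both forms into a queue-name-to-limit table, using `None` for queues with no per-queue limit.

```python
from typing import Dict, List, Optional, Union

# A queue entry is either a bare name ("default") or a one-key mapping ({"default": 80}).
QueueEntry = Union[str, Dict[str, int]]


def parse_queues(entries: List[QueueEntry]) -> Dict[str, Optional[int]]:
    """Map each queue name to its per-queue concurrency, or None when unset."""
    parsed: Dict[str, Optional[int]] = {}
    for entry in entries:
        if isinstance(entry, str):
            # Bare name: no per-queue limit; it simply shares the function's concurrency.
            parsed[entry] = None
        elif isinstance(entry, dict) and len(entry) == 1:
            name, limit = next(iter(entry.items()))
            parsed[name] = int(limit)
        else:
            raise ValueError(f"Unsupported queue entry: {entry!r}")
    return parsed


# The queues list as a YAML loader would return it for the example above.
queues = parse_queues([{"default": 80}, {"invoices": 10}, {"mail": 10}])
print(queues)  # {'default': 80, 'invoices': 10, 'mail': 10}
print(sum(v for v in queues.values() if v is not None))  # 100
```

Here the per-queue limits add up to exactly the queue-concurrency value of 100.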

What is this doing under the hood?

Taking the default queue as an example, during provisioning a maximum concurrency of 80 is set on the event source mapping between the SQS queue and the Lambda function.
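Vapor's provisioning code isn't shown in this post, so the sketch below only illustrates the shape of the setting at the AWS level. It assembles keyword arguments using the parameter names of the AWS Lambda CreateEventSourceMapping API (`EventSourceArn`, `FunctionName`, and `ScalingConfig` with `MaximumConcurrency`). The helper name, queue ARN, and function name are hypothetical placeholders.

```python
from typing import Any, Dict, Optional


def event_source_mapping_params(
    queue_arn: str, function_name: str, max_concurrency: Optional[int] = None
) -> Dict[str, Any]:
    """Build keyword arguments for Lambda's CreateEventSourceMapping call."""
    params: Dict[str, Any] = {
        "EventSourceArn": queue_arn,    # the SQS queue to poll
        "FunctionName": function_name,  # the shared queue function
    }
    if max_concurrency is not None:
        # Caps how many concurrent invocations this one queue can drive.
        params["ScalingConfig"] = {"MaximumConcurrency": max_concurrency}
    return params


# Hypothetical ARN and function name, for illustration only.
params = event_source_mapping_params(
    "arn:aws:sqs:us-east-1:123456789012:default",
    "my-project-production-queue",
    max_concurrency=80,
)
print(params["ScalingConfig"])  # {'MaximumConcurrency': 80}
```

With boto3 installed and AWS credentials configured, these arguments could be passed as `boto3.client("lambda").create_event_source_mapping(**params)`; a queue without a per-queue limit simply omits `ScalingConfig`.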

In practice, if the queue function processes enough jobs from the default queue to reach this limit, Lambda stops reading from the queue until capacity becomes available instead of throttling invocations. As a result, the queue can no longer consume all of the function's concurrency, and because no throttling takes place, the queue's redrive policy never comes into effect, so SQS doesn't have to decide what to do with throttled jobs.
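The difference between "stop reading" and "throttle" can be illustrated with a small discrete-time simulation. This is a toy model with hypothetical names and numbers, not how Lambda's SQS pollers actually batch or scale: each tick, the poller tries to hand a batch of messages to the function; messages that arrive while the function is already at its concurrency limit are counted as throttled and stay on the queue. With a mapping cap, the poller only fetches as many messages as there are free slots under the cap.

```python
from typing import List, Optional, Tuple


def simulate(
    backlog: int,
    job_ticks: int,
    function_limit: int,
    max_concurrency: Optional[int],
    poll_batch: int,
    ticks: int,
) -> Tuple[int, int, int]:
    """Toy model. Returns (completed, throttled, peak_in_flight) after `ticks` steps."""
    running: List[int] = []  # remaining ticks for each in-flight job
    completed = throttled = peak = 0
    for _ in range(ticks):
        # Advance time and retire finished jobs.
        running = [t - 1 for t in running]
        completed += sum(1 for t in running if t == 0)
        running = [t for t in running if t > 0]

        # How many messages the poller hands to the function this tick.
        want = min(backlog, poll_batch)
        if max_concurrency is not None:
            # Capped mapping: never fetch more than the free slots under the cap.
            want = min(want, max_concurrency - len(running))
        for _ in range(want):
            if len(running) < function_limit:
                running.append(job_ticks)
                backlog -= 1
            else:
                # Invocation rejected; the message stays on the queue.
                throttled += 1
        peak = max(peak, len(running))
    return completed, throttled, peak


print(simulate(1000, job_ticks=5, function_limit=10, max_concurrency=None, poll_batch=20, ticks=10))
# (10, 180, 10)
print(simulate(1000, job_ticks=5, function_limit=10, max_concurrency=10, poll_batch=20, ticks=10))
# (10, 0, 10)
```

In this model both runs complete the same amount of work, but only the uncapped run generates throttled deliveries, which in SQS would count toward a message's receive count under a redrive policy.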

Vapor makes configuring individual queue concurrency a breeze. For example, imagine a scenario where we have a queue to process videos, with each job likely to use almost all of Lambda's maximum invocation time of 15 minutes. We want to keep this queue from going wild, as it could easily consume all available resources.

In this scenario, we may create a default queue with no concurrency limit set. We're confident jobs on this queue will be processed quickly and that it's unlikely to hit the concurrency limit. We may also create a video queue with a concurrency limit of 2 (the minimum allowed by AWS) because we're happy with the trade-off that these jobs will take longer to process, safe in the knowledge that it will not take the rest of our environment down.

```yaml
id: 12345
name: my-project
environments:
    production:
        queue-concurrency: 100
        queues:
            - default
            - video: 2
```

Deploying the configuration above will do just that. It's that simple!
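A quick back-of-the-envelope check shows why the video queue can't take the environment down: capped queues together can never hold more slots than the sum of their caps, so whatever remains of queue-concurrency is always left for uncapped queues such as default. The helper below is a hypothetical sketch of that arithmetic; it also rejects per-queue values below 2, the AWS minimum mentioned above.

```python
from typing import Dict, Optional

# Minimum per-queue concurrency allowed by AWS, as noted in the text.
MIN_QUEUE_CONCURRENCY = 2


def headroom_for_uncapped(queue_concurrency: int, queues: Dict[str, Optional[int]]) -> int:
    """Slots that capped queues can never occupy, i.e. always left for uncapped queues."""
    capped_total = 0
    for name, limit in queues.items():
        if limit is None:
            continue  # uncapped queues share whatever is left over
        if limit < MIN_QUEUE_CONCURRENCY:
            raise ValueError(f"{name}: per-queue concurrency must be at least {MIN_QUEUE_CONCURRENCY}")
        capped_total += limit
    return max(queue_concurrency - capped_total, 0)


print(headroom_for_uncapped(100, {"default": None, "video": 2}))  # 98
```

For the configuration above, even with a large backlog of videos, at least 98 of the 100 slots remain available to the default queue.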

We at Vapor are committed to crafting the best serverless PHP experience, and we hope you agree this feature is another step in the right direction. We look forward to and welcome any feedback you may have.