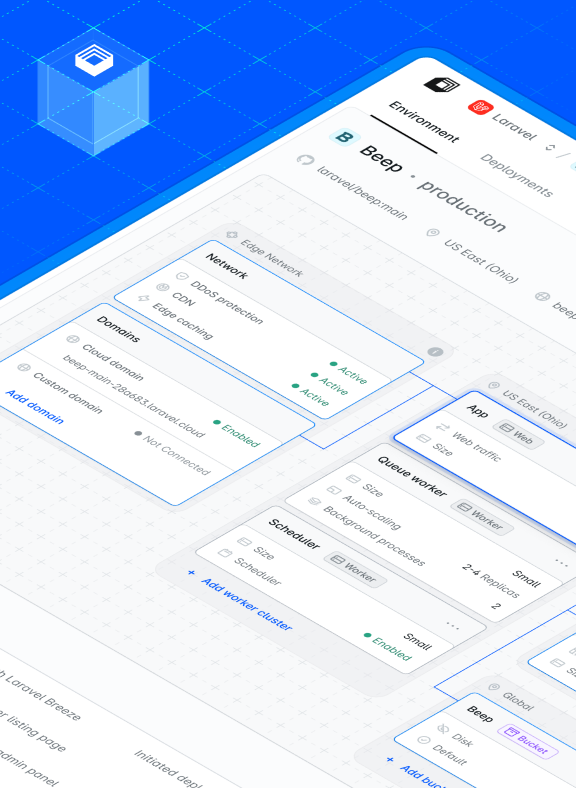

Laravel Vapor is a serverless deployment platform for Laravel, powered by AWS. With Vapor, you can quickly set up a serverless infrastructure that auto-scales with zero server maintenance.

Yet, while you can achieve blazing-fast load times in your Laravel applications powered by Vapor, you may face performance issues if you misconfigure your infrastructure.

In this article, we'll cover the most common infrastructure performance tips that may speed up your Laravel applications powered by Vapor. Before we dive in, please keep in mind:

- Optimize your application first: making your application faster may mean you do not need any infrastructure changes at all. Identify application performance bottlenecks and try to solve them using simple techniques such as caching, queuing, or database query optimizations.

- Infrastructure changes may have an impact on your AWS bill: allocating more (or different) resources for your Vapor environment may cause your AWS bill to increase. Therefore, you may need to study how the pricing of those changes will affect your AWS bill: aws.amazon.com/pricing.

Alright, let's now talk about the most common infrastructure performance tips that can speed up your Vapor-powered Laravel applications:

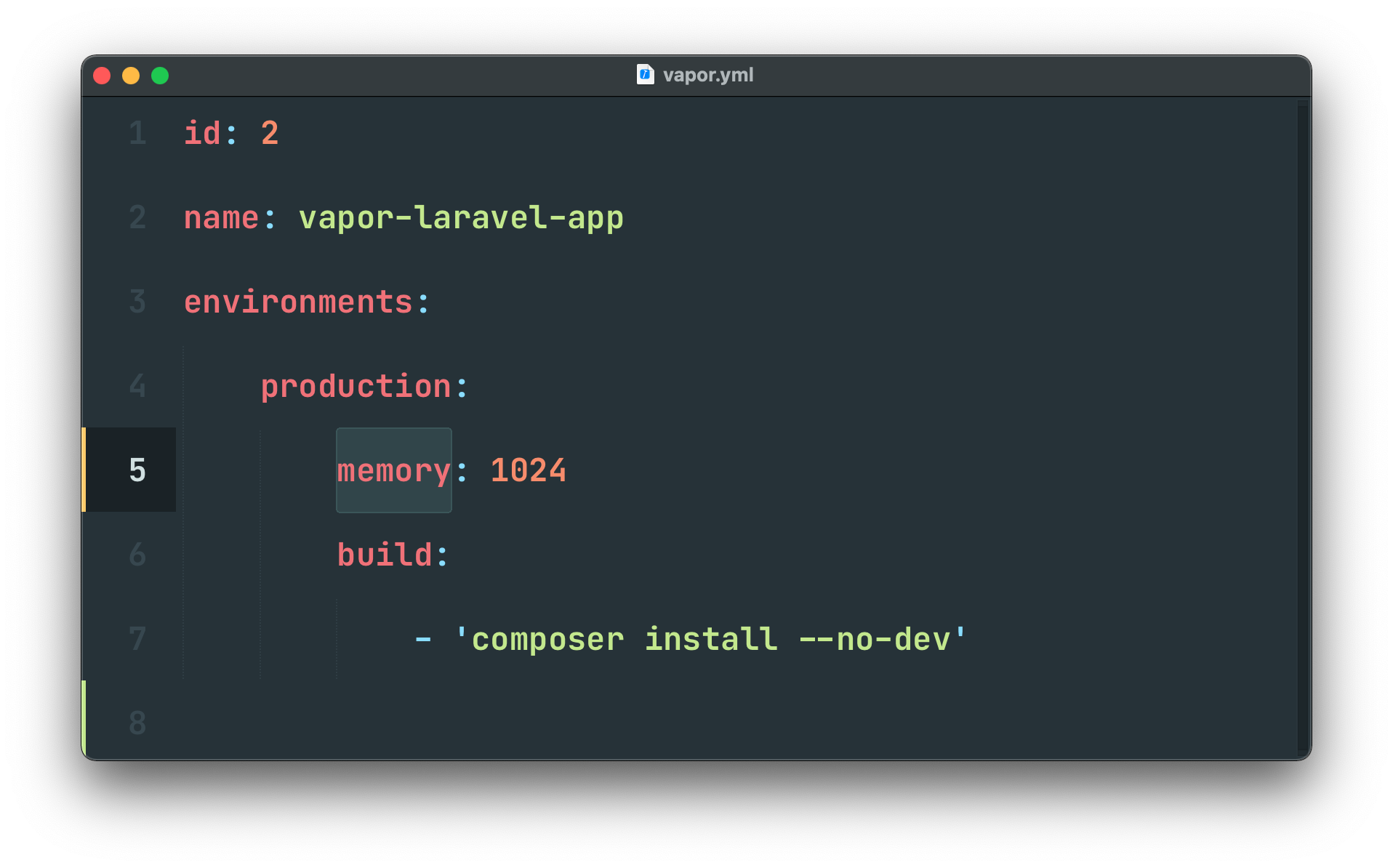

Increasing the memory option

Vapor - via AWS Lambda - allocates CPU power to your Lambda function in proportion to the amount of memory configured for the environment. Therefore, increasing the memory may lead to better performance as the Lambda function will have more CPU power to process your requests and queued jobs.

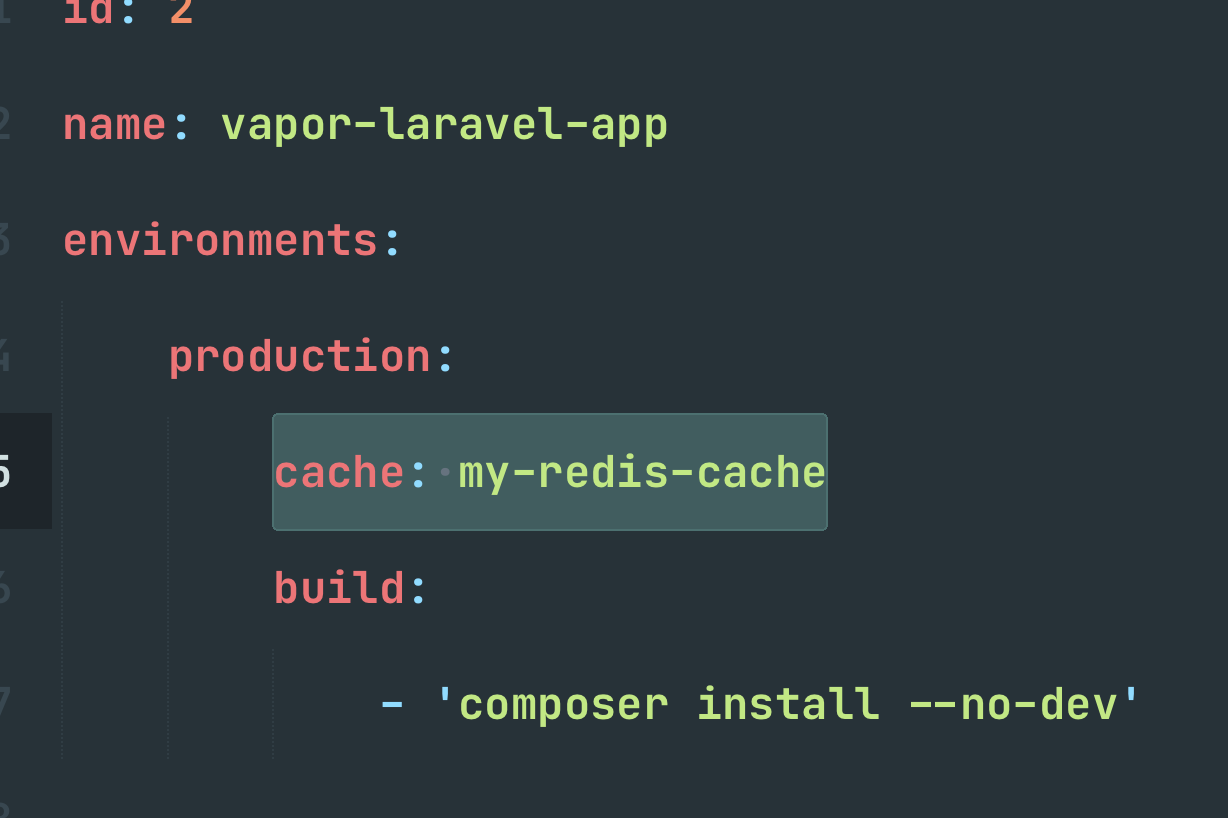

You may increase the configured memory for the HTTP Lambda function, Queue Lambda function, or CLI Lambda function using the memory, queue-memory, or cli-memory options, respectively, in your environment's vapor.yml configuration.
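As a rough sketch, the three memory options might sit in `vapor.yml` as shown here. The `id`, `name`, environment name, and the specific memory values (in MB) are placeholders chosen for illustration, not recommendations:

```yaml
# vapor.yml (illustrative sketch; id, name, and values are placeholders)
id: 1
name: my-app
environments:
    production:
        # Memory (MB) for the HTTP Lambda function
        memory: 1024
        # Memory (MB) for the Queue Lambda function
        queue-memory: 1024
        # Memory (MB) for the CLI Lambda function
        cli-memory: 512
```

Since more memory also means more CPU, it can be worth raising one value at a time and comparing response times and cost before settling on a configuration.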

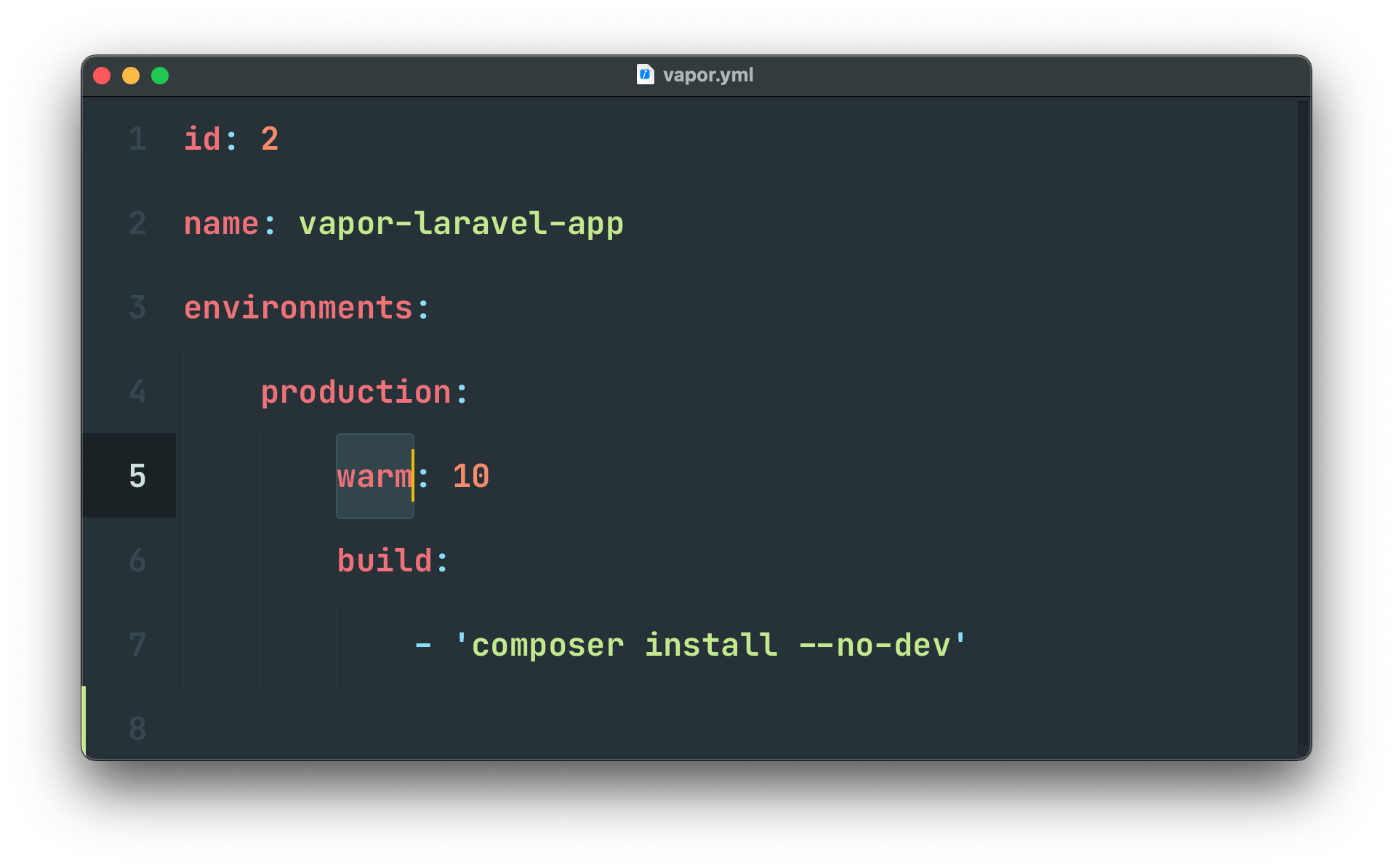

Increasing the warm option

If you are facing post-deployment performance issues at scale, your environment may not be "pre-warming" enough containers in advance. As a result, some requests incur a penalty of a few seconds while AWS loads a serverless container to process the request.

To mitigate this issue - commonly known as "cold-starts" - Vapor allows you to define a warm configuration value for an environment in your vapor.yml file. The warm value represents how many serverless containers Vapor will "pre-warm" so they can be ready to serve requests.
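For illustration, a `warm` value could be set like this. The environment name and the number `10` are assumptions for the example; a suitable value depends on how many concurrent requests you expect right after a deployment:

```yaml
# vapor.yml (illustrative sketch; the warm value is a placeholder)
environments:
    production:
        # Number of serverless containers Vapor keeps "pre-warmed"
        warm: 10
```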

Using "fixed-size" databases

Serverless databases are auto-scaling databases that do not have a fixed amount of RAM or disk space. Yet, the "auto-scaling" mechanism offered by AWS can have cold start times of up to a few seconds every time your environment needs more database resources.

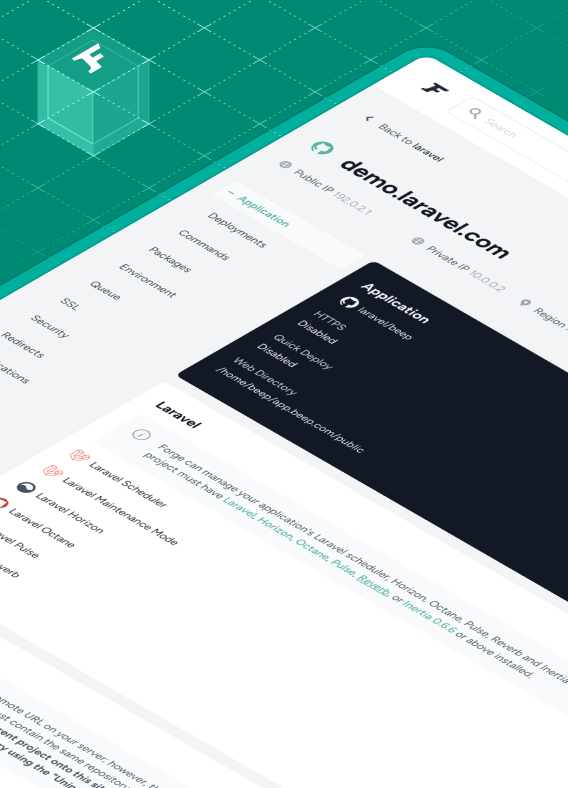

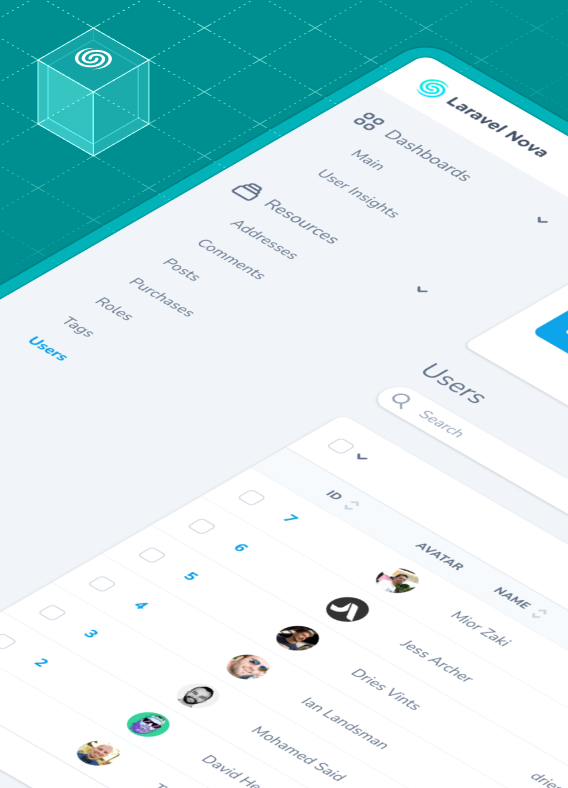

To mitigate this issue, consider using "fixed-size" databases where resources are prepared in advance. You may create "fixed-size" databases using the Vapor UI or using the database CLI command.

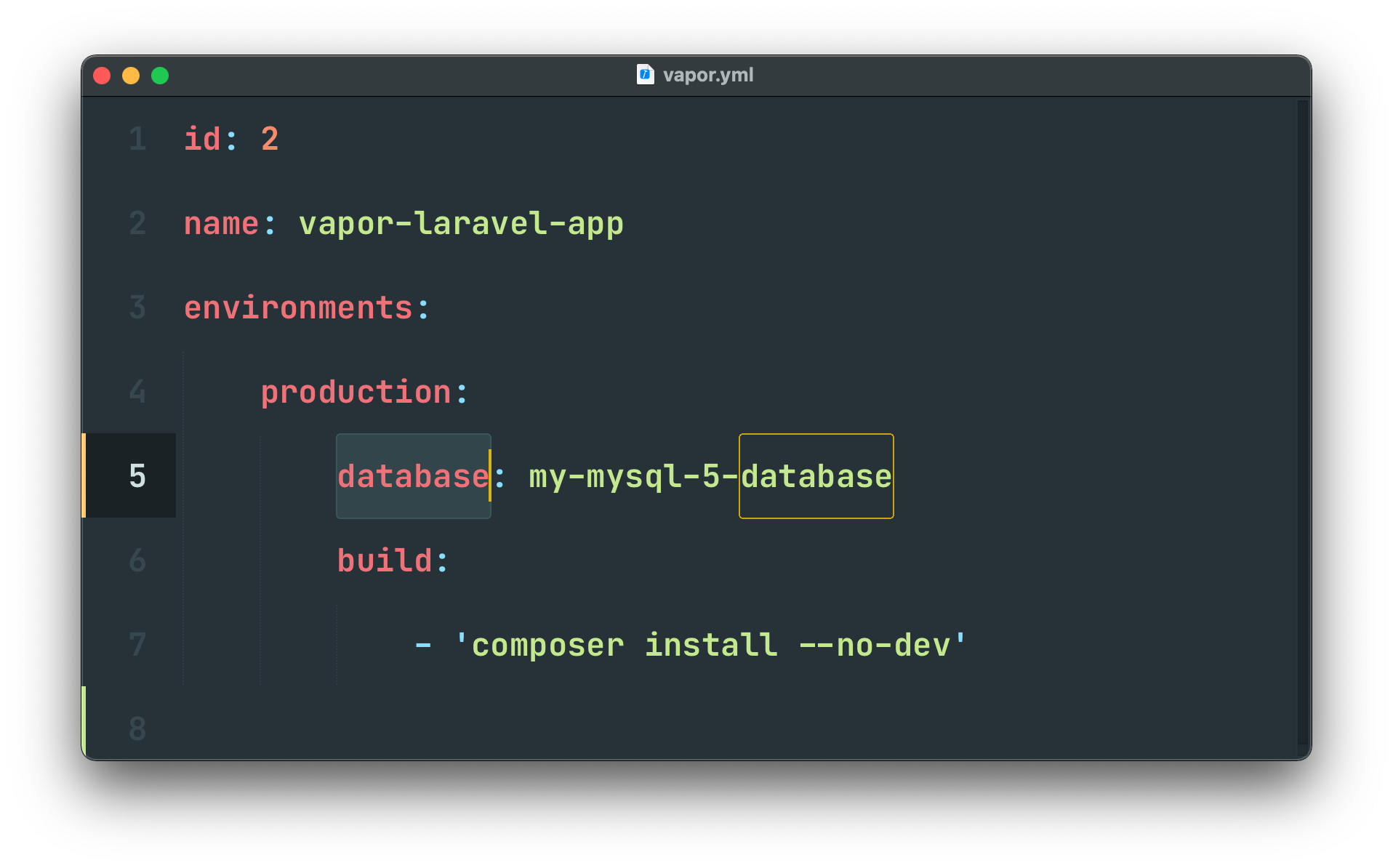

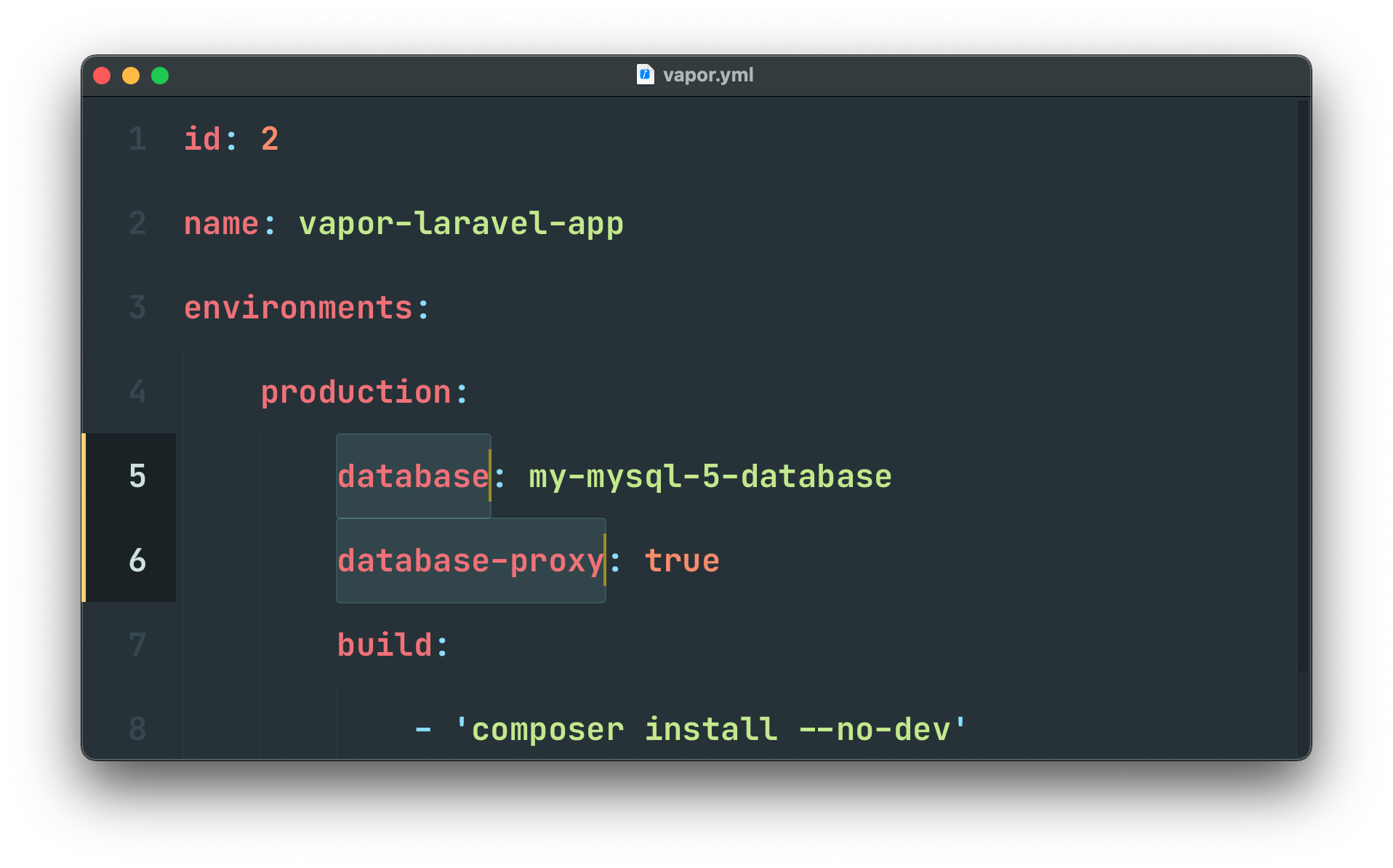

Using database proxies

Even though your serverless Laravel applications powered by Vapor can handle extreme amounts of web traffic, traditional relational databases such as MySQL can become overwhelmed and crash due to connection limit restrictions.

To mitigate this issue, Vapor allows the usage of an RDS proxy to efficiently manage your database connections and allow many more connections than would typically be possible. The database proxy can be added via the Vapor UI or the database:proxy CLI command.

Scale existing "fixed-size" resources

If you are facing performance issues at scale, existing resources - such as "fixed-size" databases or "fixed-size" caches - may not be able to keep up with the number of tasks managed by your application.

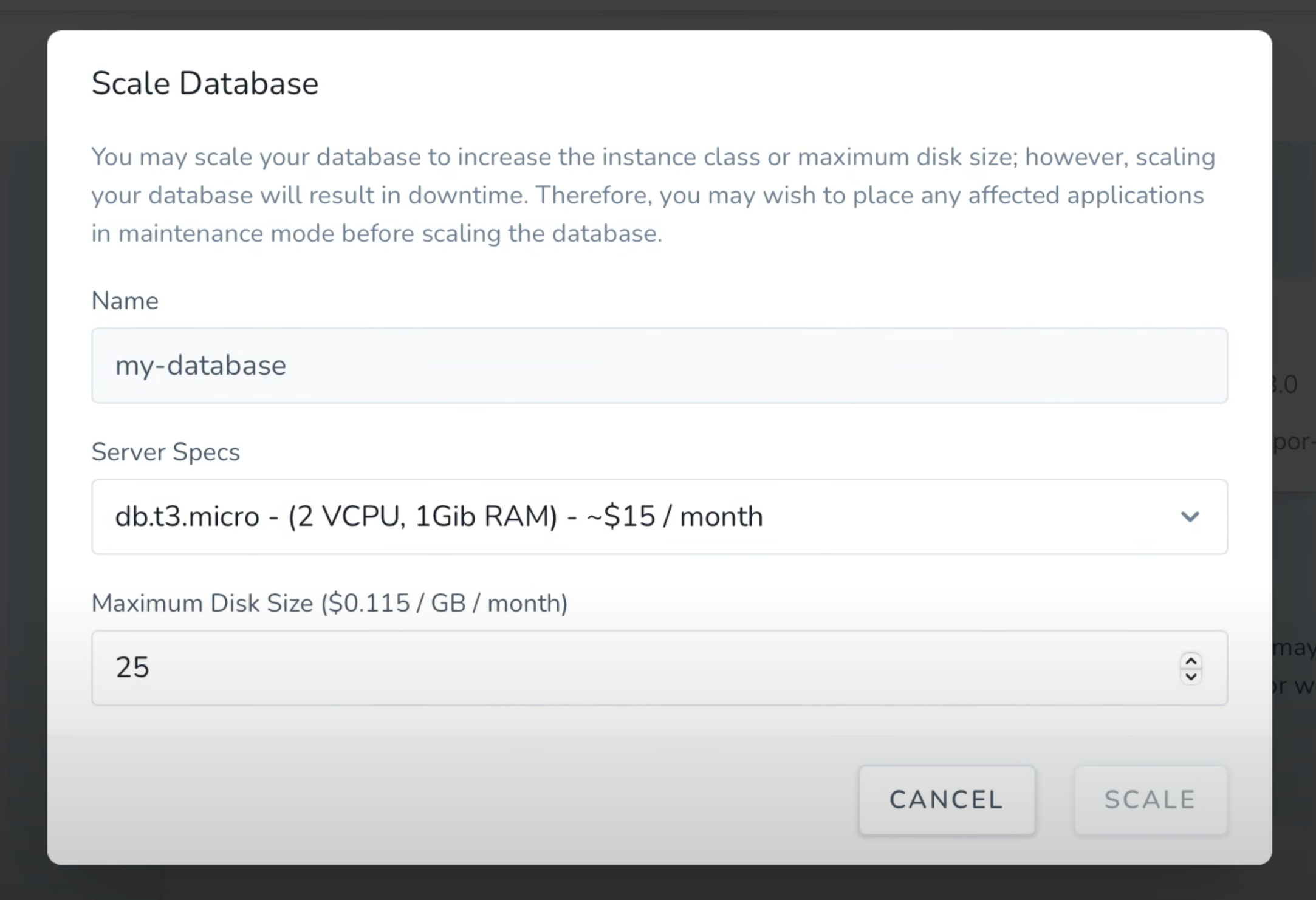

To mitigate this issue, consider scaling existing "fixed-size" resources, if any. For example, you may scale "fixed-size" databases via the Vapor UI's database detail screen or the database:scale CLI command.

Consider Redis over DynamoDB

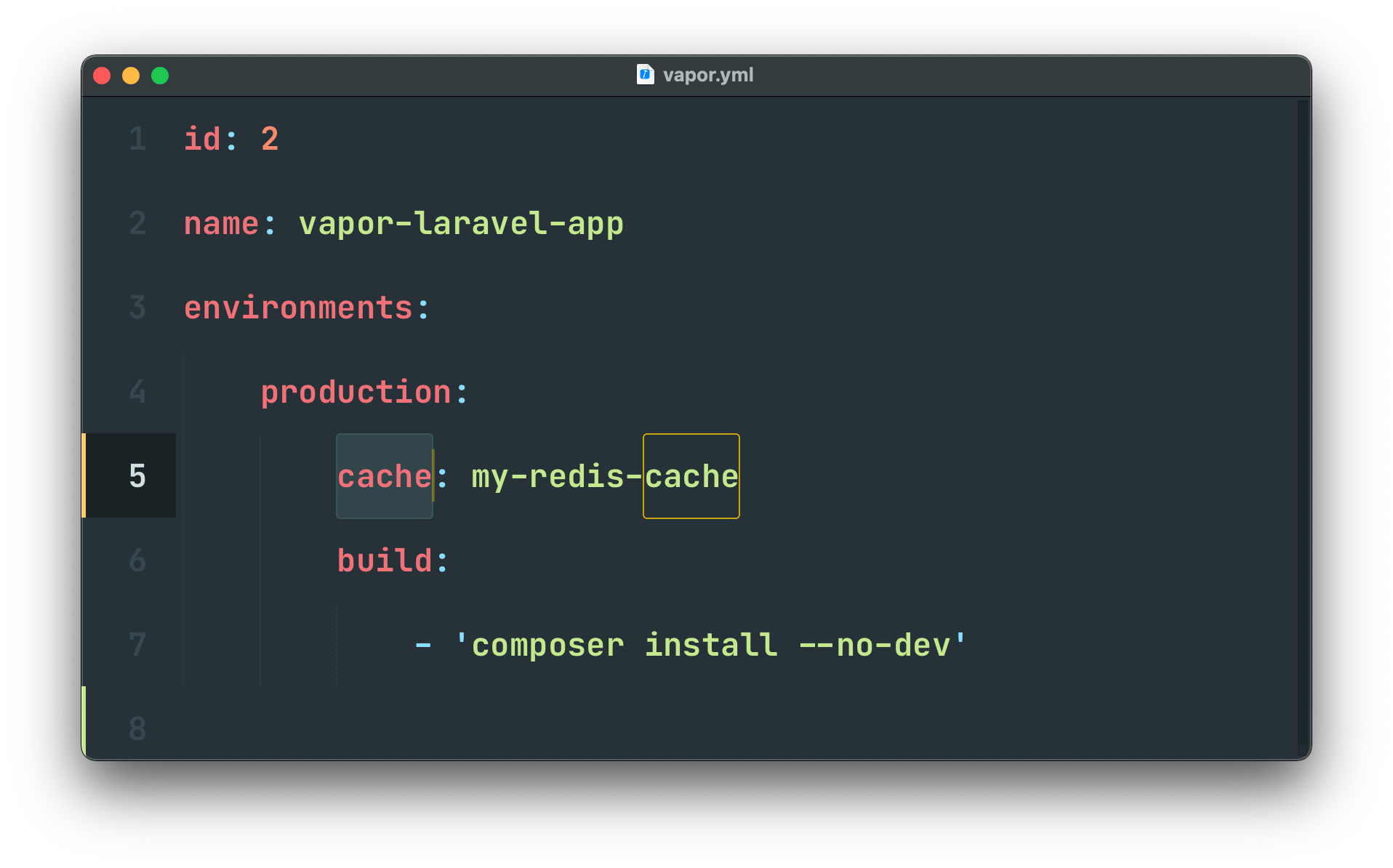

If your application heavily relies on caching - which is already a great way to massively speed up your application - using a Redis cache instead of DynamoDB can speed up cache IO.

You may create caches using the Vapor UI or using the cache CLI command.

Consider API Gateway v2 instead of API Gateway v1

By migrating from API Gateway v1 to API Gateway v2, you can expect 50% less latency in the requests to your application. However, API Gateway v2 is regional, meaning that customers from a region very distant from your project may be negatively affected by this change. Also, some features, like Vapor's managed Firewall, may not be available.

If you would like to use API Gateway v2, you may specify gateway-version: 2 in your environment's vapor.yml configuration.
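A minimal sketch of that setting, assuming an environment named `production`:

```yaml
# vapor.yml (illustrative sketch; the environment name is a placeholder)
environments:
    production:
        # Use API Gateway v2 for this environment
        gateway-version: 2
```

Given the trade-offs mentioned above, it may be safer to try this on a staging environment first and confirm that nothing you rely on, such as the managed firewall, is affected.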

Contact AWS Support

Laravel Vapor provisions and configures your projects on the AWS infrastructure. However, for performance insights regarding your infrastructure, we recommend reaching out to AWS Support, as they can deeply examine the internal logs of your infrastructure.

Conclusion

We hope you enjoyed this article about the most common Laravel Vapor infrastructure performance tips. At Laravel, we're committed to providing you with the most robust and developer-friendly PHP experience in the world. If you haven't checked out Vapor, now is a great time to start! You can create your account today at: vapor.laravel.com.